The output of `soup.find_all('a')` shows that html tags sometimes come with attributes such as class, src, etc. These attributes provide additional information about html elements. You can use a for loop and the `get('href')` method to extract and print out only the hyperlinks.

To print out table rows only, pass the `'tr'` argument to `soup.find_all()`. Printing the first 10 rows is a quick sanity check.

The goal of this tutorial is to take a table from a webpage and convert it into a dataframe for easier manipulation using Python. To get there, you should get all table rows in list form first and then convert that list into a dataframe. A for loop can iterate through the table rows and print out the cells of each row.
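The hyperlink and table-row steps described above can be sketched as follows. This is a minimal illustration, not the tutorial's original code: the html string is a made-up stand-in for the scraped page, and `"html.parser"` (built into Python) is swapped in for `'lxml'` so the sketch runs without extra parser packages.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the html of the scraped page.
html = """
<html><body>
  <a href="/results" class="nav">Results</a>
  <a href="mailto:timing@example.com">Contact</a>
  <a name="top">No link here</a>
  <table>
    <tr><th>Place</th><th>Name</th></tr>
    <tr><td>1</td><td>Alice</td></tr>
    <tr><td>2</td><td>Bob</td></tr>
  </table>
</body></html>
"""
soup = BeautifulSoup(html, "html.parser")

# Extract only the hyperlinks; get() returns None when a tag has no href.
links = [a.get("href") for a in soup.find_all("a")]
for link in links:
    print(link)

# Get every table row, then print the first 10 rows for a sanity check.
rows = soup.find_all("tr")
print(rows[:10])

# Loop through the rows and collect the text of each data cell.
# Header rows contain only <th> cells, so they are skipped here.
table = []
for row in rows:
    cells = row.find_all("td")
    if cells:
        table.append([cell.get_text(strip=True) for cell in cells])
print(table)
```

A list of rows like `table` can then be passed to `pandas.DataFrame(table)` to build the dataframe the tutorial is working toward.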

WEBSCRAPER PACKAGE PYTHON HOW TO

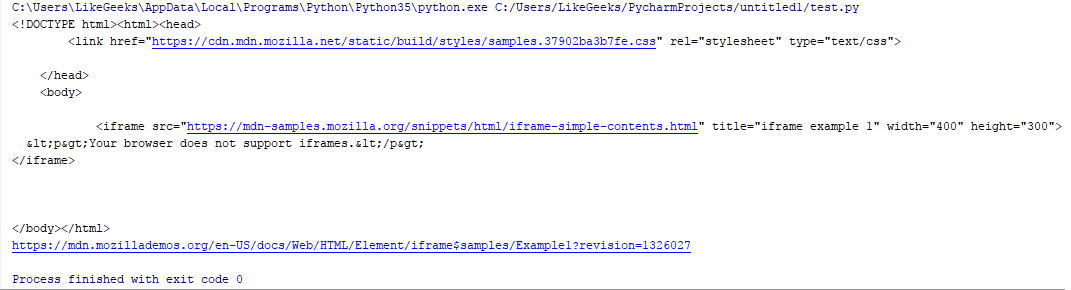

Getting the html of the page is just the first step. The next step is to create a Beautiful Soup object from the html. This is done by passing the html to the `BeautifulSoup()` function. The Beautiful Soup package is used to parse the html, that is, take the raw html text and break it into Python objects. The second argument, `'lxml'`, is the html parser, whose details you do not need to worry about at this point.

The soup object allows you to extract interesting information about the website you're scraping, such as the title of the page, which here is: 2017 Intel Great Place to Run 10K \ Urban Clash Games Race Results. You can also get the text of the webpage and quickly print it out to check if it is what you expect. You can view the html of the webpage by right-clicking anywhere on the webpage and selecting "Inspect."

You can use the `find_all()` method of soup to extract useful html tags within a webpage. Examples of useful tags include `<a>` for hyperlinks, `<table>` for tables, `<tr>` for table rows, `<th>` for table headers, and `<td>` for table cells. Calling `soup.find_all('a')`, for example, extracts all the hyperlinks within the webpage.
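As a rough sketch of the parsing step described above, the snippet below builds a soup object and reads the title and text from it. The html string is a hypothetical stand-in for the page returned by `urlopen()`, and `"html.parser"` (which ships with Python) is used in place of `'lxml'` so that no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the html returned by urlopen().
html = """
<html>
  <head><title>Sample Race Results</title></head>
  <body>
    <h1>Results</h1>
    <p>Finishers: 2</p>
  </body>
</html>
"""

# The second argument names the parser. The tutorial passes 'lxml';
# "html.parser" is an assumption made here to avoid an extra dependency.
soup = BeautifulSoup(html, "html.parser")

# Get the title
title = soup.title
print(title)         # the whole <title> tag
print(title.string)  # only the text inside the tag

# Get the text of the page to check that it is what you expect
text = soup.get_text()
print(text)
```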

`from urllib.request import urlopen`

After importing necessary modules, you should specify the URL containing the dataset and pass it to `urlopen()` to get the html of the page.
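A minimal sketch of this step is shown below. The tutorial's actual URL does not appear in this section, so a `data:` URL built from a made-up html string stands in for it; that keeps the example runnable offline. With a real page, `url` would simply be its http(s) address.

```python
from urllib.parse import quote
from urllib.request import urlopen

# Hypothetical sample page; an assumption used only for illustration.
SAMPLE_HTML = (
    "<html><head><title>Sample Race Results</title></head>"
    "<body><p>Hello</p></body></html>"
)

# A data: URL embeds the page content in the URL itself.
url = "data:text/html;charset=utf-8," + quote(SAMPLE_HTML)

# urlopen() returns a response object; read() gives the page as raw bytes.
with urlopen(url) as response:
    html = response.read()

print(type(html).__name__)
print(html[:40])
```

The bytes in `html` are what gets handed to the parser in the next step.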

Using Jupyter Notebook, you should start by importing the necessary modules (pandas, numpy, matplotlib.pyplot, seaborn). If you don't have Jupyter Notebook installed, I recommend installing it using the Anaconda Python distribution, which is available on the internet. To easily display the plots, make sure to include the line `%matplotlib inline`.

To perform web scraping, you should also import `urllib.request` and Beautiful Soup. The `urllib.request` module is used to open URLs. The Beautiful Soup package is used to extract data from html files. The Beautiful Soup library's name is `bs4`, which stands for Beautiful Soup, version 4.

WEBSCRAPER PACKAGE PYTHON DOWNLOAD

Web scraping is a term used to describe the use of a program or algorithm to extract and process large amounts of data from the web. Whether you are a data scientist, engineer, or anybody who analyzes large amounts of data, the ability to scrape data from the web is a useful skill to have. Let's say you find data on the web and there is no direct way to download it. Web scraping using Python is a skill you can use to extract the data into a useful form that can be imported.
